Abstract Classes ®

Question:-1(a)

Design and develop an efficient algorithm to find the list of prime numbers in the range 501 to 2000. What is the complexity of this algorithm?

Answer:

🔍 Sieve of Eratosthenes Algorithm for Primes (501 to 2000)

🧠 Step 1: Algorithm Design

- Create a boolean list

is_prime[0..2000], initialize all entries asTrue. - Mark

is_prime[0]andis_prime[1]asFalse. - For

i=2 i = 2 sqrt2000~~44 \sqrt{2000} \approx 44 - If

is_prime[i]isTrue, mark all multiples ofi i i^(2) i^2 False.

- If

- Extract all primes between 501 and 2000 where

is_prime[i]isTrue.

⚙️ Step 2: Pseudocode

n = 2000

is_prime = [True] * (n+1)

is_prime[0] = False

is_prime[1] = False

for i from 2 to int(sqrt(n)) + 1:

if is_prime[i] is True:

for j from i*i to n, step i:

is_prime[j] = False

primes = []

for k from 501 to n:

if is_prime[k]:

primes.append(k)

⏱️ Step 3: Complexity Analysis

- Time Complexity:

O(n log log n) O(n \log \log n)

(Efficient due to inner loop marking multiples only for primes) - Space Complexity:

O(n) O(n)

(For the boolean list of size 2001)

✅ Why Efficient?

- Eliminates multiples of each prime starting from

i^(2) i^2 - Optimal for fixed ranges like 501–2000.

🧾 Example Output (First 5 primes in range):

- 503, 509, 521, 523, 541...

🎯 Conclusion:

Sieve of Eratosthenes is optimal for generating primes in a contiguous range due to its near-linear time complexity.

Sieve of Eratosthenes is optimal for generating primes in a contiguous range due to its near-linear time complexity.

Question:-1(b)

Differentiate between Cubic-time and Factorial-time algorithms. Give example of one algorithm each for these two running times.

Answer:

⏱️ Cubic-Time vs. Factorial-Time Algorithms

🧊 Cubic-Time Algorithms

- Time Complexity:

O(n^(3)) O(n^3) - Description: Running time grows proportional to the cube of the input size.

- Example:

3 nested loops that each iteraten n for i in range(n): for j in range(n): for k in range(n): # constant-time operationn xx n n \times n

🧮 Factorial-Time Algorithms

- Time Complexity:

O(n!) O(n!) - Description: Running time grows factorial with input size (extremely fast).

- Example:

Generating all permutations ofn n def generate_permutations(arr, start=0): if start == len(arr): print(arr) for i in range(start, len(arr)): swap(arr, start, i) generate_permutations(arr, start+1) swap(arr, start, i) # backtrack

📊 Key Differences:

| Aspect | Cubic-Time ( | Factorial-Time ( |

|---|---|---|

| Growth Rate | Moderate | Explosive |

| Feasibility | Practical for small | Impractical even for small |

| Use Case | Matrix operations | Combinatorial problems |

Question:-1(c)

Write an algorithm to multiply two square matrices of order n xx n n \times n

Answer:

🔢 Algorithm for Matrix Multiplication (n × n)

Input: Two matrices A A B B n xx n n \times n

Output: Product matrixC=A xx B C = A \times B n xx n n \times n

Output: Product matrix

📝 Algorithm Steps:

- Initialize a result matrix

C C n xx n n \times n - For each row

i i n-1 n-1 - For each column

j j n-1 n-1 - Set

C[i][j]=0 C[i][j] = 0 - For each index

k k n-1 n-1 C[i][j]+=A[i][k]xx B[k][j] C[i][j] += A[i][k] \times B[k][j]

- Set

- For each column

- Return

C C

🧮 Example:

Let A=[[a,b],[c,d]] A = \begin{bmatrix} a & b \\ c & d \end{bmatrix} B=[[e,f],[g,h]] B = \begin{bmatrix} e & f \\ g & h \end{bmatrix}

ThenC=[[ae+bg,af+bh],[ce+dg,cf+dh]] C = \begin{bmatrix} ae+bg & af+bh \\ ce+dg & cf+dh \end{bmatrix}

Then

⏱️ Time Complexity:

- 3 nested loops, each running

n n - Total operations:

n xx n xx n=n^(3) n \times n \times n = n^3 - Time Complexity:

O(n^(3)) O(n^3)

Question:-1(d)

What are asymptotic bounds for analysis of efficiency of algorithms? Why are asymptotic bounds used? What are their shortcomings? Explain the Big O and Big Theta \Theta Theta \Theta f(n)=100n^(4)+1000n^(3)+100000 f(n) = 100n^4 + 1000n^3 + 100000

Answer:

📈 Asymptotic Bounds for Algorithm Efficiency

🔍 What are Asymptotic Bounds?

Asymptotic bounds describe how an algorithm’s resource usage (time or space) grows as the input size n n

🎯 Why Use Asymptotic Bounds?

- Simplification: Ignore constants and focus on growth rate.

- Comparison: Easily compare algorithms based on scalability.

- Predictive: Help estimate performance for large inputs.

⚠️ Shortcomings:

- Ignore constants: May mislead for small inputs (e.g.,

O(n^(2)) O(n^2) O(n) O(n) n n - Not exact: Do not give actual running time, only growth trend.

- Worst-case focus: Some bounds (e.g., Big O) often describe worst-case, not average or best-case.

📊 Big O and Big Theta \Theta

- Big O (

O O

Example:f(n)=O(g(n)) f(n) = O(g(n)) f f g g - Big Theta (

Theta \Theta

Example:f(n)=Theta(g(n)) f(n) = \Theta(g(n)) f f g g

📉 Diagram Concept:

Growth Rate:

^

| Big O: f(n) ≤ c·g(n) (above curve)

| Big Θ: c₁·g(n) ≤ f(n) ≤ c₂·g(n) (sandwiched)

|

|----------------------------------> n

🧮 For f(n)=100n^(4)+1000n^(3)+100000 f(n) = 100n^4 + 1000n^3 + 100000

- Dominant term:

100n^(4) 100n^4 - Big O:

O(n^(4)) O(n^4) f(n) <= c*n^(4) f(n) \leq c \cdot n^4 c=1000 c = 1000 n >= n_(0) n \geq n_0 - Big Θ:

Theta(n^(4)) \Theta(n^4) c_(1)n^(4) <= f(n) <= c_(2)n^(4) c_1 n^4 \leq f(n) \leq c_2 n^4 c_(1)=100 c_1 = 100 c_(2)=1000 c_2 = 1000 n >= n_(0) n \geq n_0

✅ Final Notations:

- Big O:

O(n^(4)) O(n^4) - Big Θ:

Theta(n^(4)) \Theta(n^4)

Question:-1(e)

Write and explain the Left to Right binary exponentiation algorithm. Demonstrate the use of this algorithm to compute the value of 3^(29) 3^{29}

Answer:

🔢 Left-to-Right Binary Exponentiation Algorithm

This algorithm efficiently computes a^(n) a^n n n

📝 Algorithm Steps:

- Convert

n n b b - Initialize result

r=1 r = 1 - For each binary digit

b_(i) b_i r=r xx r r = r \times r - If

b_(i)=1 b_i = 1 r=r xx a r = r \times a

- Return

r r

🧮 Compute 3^(29) 3^{29}

- Binary of 29:

11101 11101 - Steps:

| Step | Bit | Operation | Value |

|---|---|---|---|

| 1 | 1 | 3 | |

| 2 | 1 | 27 | |

| 3 | 1 | 2187 | |

| 4 | 0 | 4782969 | |

| 5 | 1 | 68630377364883 |

✅ Result: 3^(29)=68630377364883 3^{29} = 68630377364883

⏱️ Worst-Case Complexity:

- Number of bits:

|__log_(2)n __|+1 \lfloor \log_2 n \rfloor + 1 - Each step requires a square (and sometimes a multiply).

- Total operations:

O(log n) O(\log n)

This is efficient for large exponents! 🚀

Question:-1(f)

Write and explain the Bubble sort algorithm. Discuss its best and worst-case time complexity.

Answer:

🔁 Bubble Sort Algorithm

Bubble sort is a simple sorting algorithm that repeatedly steps through the list, compares adjacent elements, and swaps them if they are in the wrong order. The pass through the list is repeated until the list is sorted.

Bubble sort is a simple sorting algorithm that repeatedly steps through the list, compares adjacent elements, and swaps them if they are in the wrong order. The pass through the list is repeated until the list is sorted.

📝 Algorithm Steps:

- Start with an unsorted list of

n n - For each pass from

i=0 i = 0 n-1 n-1 - For each element from

j=0 j = 0 n-i-1 n-i-1 - Compare

arr[j] arr[j] arr[j+1] arr[j+1] - If

arr[j] > arr[j+1] arr[j] > arr[j+1]

- Compare

- For each element from

- Repeat until no swaps are needed in a full pass.

⚡ Example:

Sort: [5, 2, 9, 1]

Sort: [5, 2, 9, 1]

- Pass 1: [2, 5, 1, 9]

- Pass 2: [2, 1, 5, 9]

- Pass 3: [1, 2, 5, 9] → Sorted

⏱️ Time Complexity:

- Worst-case (reverse sorted):

O(n^(2)) O(n^2) - Best-case (already sorted):

O(n) O(n) - Average-case:

O(n^(2)) O(n^2)

Question:-1(g)

What are the uses of recurrence relations? Solve the following recurrence relations using the Master’s method:

Answer:

📈 Uses of Recurrence Relations

- Analyzing algorithm efficiency (e.g., divide-and-conquer algorithms).

- Modeling problems in computer science, engineering, and mathematics.

- Predicting time/space complexity of recursive functions.

🧮 Master’s Method

The Master Theorem solves recurrences of the form:

Compare f(n) f(n) n^(log _(b)a) n^{\log_b a}

🔹 (a) T(n)=4T((n)/(4))+n T(n) = 4T\left(\frac{n}{4}\right) + n

a=4 a = 4 b=4 b = 4 f(n)=n f(n) = n n^(log _(b)a)=n^(log_(4)4)=n^(1)=n n^{\log_b a} = n^{\log_4 4} = n^1 = n - Since

f(n)=n=Theta(n^(log _(b)a)) f(n) = n = \Theta(n^{\log_b a}) - Solution:

T(n)=Theta(n^(log _(b)a)log n)=Theta(n log n) T(n) = \Theta(n^{\log_b a} \log n) = \Theta(n \log n)

🔹 (b) T(n)=4T((3n)/(4))+n T(n) = 4T\left(\frac{3n}{4}\right) + n

a=4 a = 4 b=(4)/(3) b = \frac{4}{3} f(n)=n f(n) = n n^(log _(b)a)=n^(log_(4//3)4) n^{\log_b a} = n^{\log_{4/3} 4}

Note:log_(4//3)4 > 1 \log_{4/3} 4 > 1 4//3 < 4 4/3 < 4 n^(log _(b)a) n^{\log_b a} n n - Specifically,

log_(4//3)4~~4.82 \log_{4/3} 4 \approx 4.82 n^(log _(b)a)~~n^(4.82) n^{\log_b a} \approx n^{4.82} - Since

f(n)=n=O(n^(log _(b)a-epsi)) f(n) = n = O(n^{\log_b a - \varepsilon}) epsi > 0 \varepsilon > 0 - Solution:

T(n)=Theta(n^(log_(4//3)4)) T(n) = \Theta(n^{\log_{4/3} 4})

✅ Final Answers:

- (a)

Theta(n log n) \Theta(n \log n) - (b)

Theta(n^(log_(4//3)4)) \Theta(n^{\log_{4/3} 4}) Theta(n^(4.82)) \Theta(n^{4.82})

Question:-2(a)

What is an Optimisation Problem? Explain with the help of an example. When would you use a Greedy Approach to solve optimisation problem? Formulate the Task Scheduling Problem as an optimisation problem and write a greedy algorithm to solve this problem. Also, solve the following fractional Knapsack problem using greedy approach. Show all the steps. Suppose there is a knapsack of capacity 20 Kg and the following 6 items are to be packed in it. The weight and profit of the items are as under:

Select a subset of the items that maximises the profit while keeping the total weight below or equal to the given capacity.

Answer:

🎯 Optimization Problem

An optimization problem involves finding the best solution from all feasible solutions, typically to maximize or minimize a function under constraints.

Example:

Finding the shortest path between two cities on a map.

Finding the shortest path between two cities on a map.

⚡ Greedy Approach

Use a greedy approach when:

- The problem has the greedy choice property (local optimal choices lead to global optimum).

- It has optimal substructure (solution to subproblems helps solve the main problem).

📅 Task Scheduling as Optimization

Problem: Schedule tasks to maximize the number of non-overlapping tasks.

Formulation:

Formulation:

- Each task has start and end times.

- Goal: Select maximum tasks without overlap.

Greedy Algorithm:

- Sort tasks by end time.

- Select first task.

- For each next task, if start ≥ last end time, select it.

🎒 Fractional Knapsack Problem

Capacity: 20 kg

Items:

Items:

| Item | Profit | Weight | Profit/Weight |

|---|---|---|---|

| 1 | 30 | 5 | 6.0 |

| 2 | 16 | 4 | 4.0 |

| 3 | 18 | 6 | 3.0 |

| 4 | 20 | 4 | 5.0 |

| 5 | 10 | 5 | 2.0 |

| 6 | 7 | 7 | 1.0 |

Greedy Steps:

- Sort by profit/weight (descending):

Order: Item1 (6.0), Item4 (5.0), Item2 (4.0), Item3 (3.0), Item5 (2.0), Item6 (1.0) - Add items fully until capacity allows:

- Item1: w=5, p=30 → cap=15, profit=30

- Item4: w=4, p=20 → cap=11, profit=50

- Item2: w=4, p=16 → cap=7, profit=66

- Item3: w=6 > cap=7? Add fraction: (7/6)*18 = 21 → profit=66+21=87, cap=0

Max Profit: 87 ✅

Question:-2(b)

Assuming that data to be transmitted consists of only characters ‘a’ to ‘g’, design the Huffman code for the following frequencies of character data. Show all the steps of building a Huffman tree. Also, show how a coded sequence using Huffman code can be decoded.

Answer:

🌳 Huffman Coding Steps

📊 Given Frequencies:

| Char | Freq |

|---|---|

| a | 5 |

| b | 25 |

| c | 10 |

| d | 15 |

| e | 8 |

| f | 7 |

| g | 30 |

🔨 Step 1: Build Huffman Tree

- Sort by frequency (ascending):

a:5, f:7, e:8, c:10, d:15, b:25, g:30 - Merge two smallest (a:5 + f:7 = 12):

New list:

e:8, c:10, 12, d:15, b:25, g:30

(Node: a+f → 12) - Merge next two (e:8 + c:10 = 18):

New list:

12, 18, d:15, b:25, g:30 - Merge 12 and d:15 = 27:

New list:

18, 27, b:25, g:30 - Merge 18 and b:25 = 43:

New list:

27, 43, g:30 - Merge 27 and g:30 = 57:

New list:

43, 57 - Merge 43 and 57 = 100 (root)

🧩 Huffman Tree Structure:

(100)

/ \

(43) (57)

/ \ / \

(18) (25)b (27) (30)g

/ \ / \

(8)e (10)c (12) (15)d

/ \

(5)a (7)f

🔤 Huffman Codes (Assign 0/0 to left/right):

- g: 11

- b: 01

- d: 101

- c: 001

- e: 000

- a: 1000

- f: 1001

🔍 Decoding a Coded Sequence

Example: Decode

11011001000 (Assume: g=11, b=01, d=101, etc.)- Start at root.

- Read bits:

11→ g01→ b10→ partial (d? Wait, d=101, so need more)101→ d000→ e

Decoded: g, b, d, e

✅ Summary:

- Huffman codes are variable-length, prefix-free.

- Decoding traverses tree until a leaf is reached.

Question:-2(c)

Explain the Merge procedure of the Merge Sort algorithm. Demonstrate the use of recursive Merge sort algorithm for sorting the following data of size 8: [19, 18, 16, 12, 11, 10, 9, 8]. Compute the complexity of Merge Sort algorithm.

Answer:

🔄 Merge Procedure in Merge Sort

The merge procedure combines two sorted subarrays into a single sorted array. It works by:

- Comparing the smallest elements of each subarray.

- Taking the smaller element and placing it in the result.

- Repeating until all elements are merged.

🧩 Example Merge:

Left: [12, 16, 18, 19]

Right: [8, 9, 10, 11]

Merged: [8, 9, 10, 11, 12, 16, 18, 19]

Right: [8, 9, 10, 11]

Merged: [8, 9, 10, 11, 12, 16, 18, 19]

🧠 Recursive Merge Sort on [19,18,16,12,11,10,9,8]

Step 1: Split recursively

- [19,18,16,12] and [11,10,9,8]

- [19,18] and [16,12]; [11,10] and [9,8]

- [19] and [18]; [16] and [12]; [11] and [10]; [9] and [8]

Step 2: Merge pairs

- Merge [19] & [18] → [18,19]

- Merge [16] & [12] → [12,16]

- Merge [11] & [10] → [10,11]

- Merge [9] & [8] → [8,9]

Step 3: Merge groups

- Merge [18,19] & [12,16] → [12,16,18,19]

- Merge [10,11] & [8,9] → [8,9,10,11]

Step 4: Final merge

- Merge [12,16,18,19] & [8,9,10,11] → [8,9,10,11,12,16,18,19]

⏱️ Complexity Analysis

- Time:

O(n log n) O(n \log n) - Space:

O(n) O(n)

✅ Sorted Array: [8,9,10,11,12,16,18,19]

Question:-2(d)

Explain the divide and conquer approach of multiplying two large integers. Compute the time complexity of this approach. Also, explain the binary search algorithm and find its time complexity.

Answer:

🧮 Divide and Conquer for Large Integer Multiplication

📦 Approach:

Split two n n x x y y

Then:

Recursively compute a*c a \cdot c a*d a \cdot d b*c b \cdot c b*d b \cdot d

⏱️ Time Complexity:

- Recurrence:

T(n)=4T(n//2)+O(n) T(n) = 4T(n/2) + O(n) - Using Master Theorem:

O(n^(log_(2)4))=O(n^(2)) O(n^{\log_2 4}) = O(n^2)

(Same as school method, but Karatsuba improves toO(n^(1.58)) O(n^{1.58})

🔍 Binary Search Algorithm

🎯 Steps:

- Start with sorted array.

- Compare target with middle element.

- If equal, return index.

- If target < middle, search left half.

- If target > middle, search right half.

- Repeat until found or subarray size becomes 0.

⏱️ Time Complexity:

- Each step halves the search space.

- Recurrence:

T(n)=T(n//2)+O(1) T(n) = T(n/2) + O(1) - Solution:

O(log n) O(\log n)

✅ Summary:

- Integer Multiplication:

O(n^(2)) O(n^2) - Binary Search:

O(log n) O(\log n)

Question:-2(e)

Explain the Topological sorting with the help of an example. Also, explain the algorithm of finding strongly connected components in a directed Graph.

Answer:

🔝 Topological Sorting

Topological sorting is a linear ordering of the vertices of a directed acyclic graph (DAG) such that for every directed edge u rarr v u \to v u u v v

📌 Example:

Consider a DAG with edges:

A rarr C A \to C B rarr C B \to C B rarr D B \to D C rarr E C \to E D rarr E D \to E

Valid topological orders:

A,B,C,D,E A, B, C, D, E B,A,D,C,E B, A, D, C, E

(Note:A A B B

⚙️ Algorithm (Kahn’s Algorithm):

- Compute in-degree (number of incoming edges) for each vertex.

- Enqueue all vertices with in-degree 0.

- While queue not empty:

- Dequeue a vertex

u u - For each neighbor

v v u u - Reduce in-degree of

v v - If in-degree becomes 0, enqueue

v v

- Reduce in-degree of

- Dequeue a vertex

🔄 Strongly Connected Components (SCCs)

A strongly connected component of a directed graph is a maximal subgraph where every vertex is reachable from every other vertex.

⚙️ Algorithm (Kosaraju’s Algorithm):

- Step 1: Perform DFS on the graph to compute finishing times.

- Step 2: Compute the transpose graph (reverse all edges).

- Step 3: Perform DFS on the transpose graph in decreasing order of finishing times.

Each DFS tree in step 3 is an SCC.

📌 Example:

Graph:

A rarr B A \to B B rarr C B \to C C rarr A C \to A C rarr D C \to D D rarr E D \to E E rarr D E \to D

SCCs:

{A,B,C} \{A, B, C\} {D,E} \{D, E\}

Question:-3(a)

Consider the following Graph:

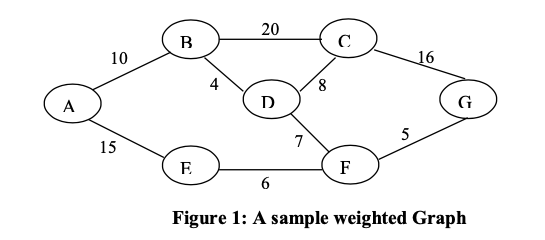

Write the Prim’s algorithm to find the minimum cost spanning tree of a graph. Also, find the time complexity of Prim’s algorithm. Demonstrate the use of Kruskal’s algorithm and Prim’s algorithm to find the minimum cost spanning tree for the Graph given in Figure 1. Show all the steps.

Answer:

Prim’s Algorithm for Minimum Spanning Tree (MST)

Prim’s algorithm is a greedy algorithm that finds the MST of a connected, undirected, weighted graph. It starts from an arbitrary vertex and grows the MST by repeatedly adding the smallest-weight edge that connects a vertex in the MST to a vertex outside of it.

Pseudocode

PrimMST(Graph G = (V, E), weights w: E → R, start_vertex s ∈ V):

// Initialize

MST = empty set // Set of edges in the MST

inMST = array of size |V|, initialized to False // Tracks vertices in MST

key = array of size |V|, initialized to infinity // Minimum weight to connect to MST

parent = array of size |V|, initialized to -1 // Parent in MST

key[s] = 0 // Start from s

// Use a priority queue (min-heap) to select minimum key vertex

PQ = priority_queue of all vertices, keyed by key values

while PQ is not empty:

u = extract_min(PQ) // Vertex with smallest key not in MST

inMST[u] = True

for each neighbor v of u:

if not inMST[v] and w(u, v) < key[v]:

key[v] = w(u, v)

parent[v] = u

update PQ with new key for v // Decrease key operation

// Construct MST edges from parent array (excluding root)

for each v in V except s:

add edge (parent[v], v) to MST with weight key[v]

return MST

How to Arrive at the Solution

- Initialization: Start with a single vertex (arbitrary choice) in the MST. Set its key to 0 and others to infinity.

- Growth: Use a priority queue to always pick the next vertex outside the MST with the smallest connecting edge. Update keys for its neighbors if a better edge is found.

- Termination: Continues until all vertices are included (|V| - 1 edges in MST).

- This ensures the MST is built by always choosing the locally optimal (smallest) safe edge, guaranteeing global optimality for undirected graphs with positive weights (by the greedy choice property).

Time Complexity

- With a binary heap for the priority queue and adjacency list representation:

- Extract-min: O(V log V) total (V extractions).

- Decrease-key: O(E log V) total (up to E updates).

- Overall: O((V + E) log V), which simplifies to O(E log V) since E ≥ V-1 in connected graphs.

- For dense graphs (E ≈ V²) using an adjacency matrix without heap: O(V²).

- The O(E log V) is common for sparse graphs.

Demonstration on the Given Graph

The graph has vertices: A, B, C, D, E, F, G.

Edges with weights:

Edges with weights:

- A-B: 10

- A-E: 15

- B-C: 20

- B-D: 4

- C-D: 8

- C-G: 16

- D-F: 7

- E-F: 6

- F-G: 5

It is connected and undirected.

Kruskal’s Algorithm Demonstration

Kruskal’s algorithm sorts all edges by increasing weight and adds them to the MST if they do not form a cycle (using Union-Find for cycle detection).

- Sort edges by weight:

(B-D: 4), (F-G: 5), (E-F: 6), (D-F: 7), (C-D: 8), (A-B: 10), (A-E: 15), (C-G: 16), (B-C: 20). - Initialize Union-Find: Each vertex is its own component: {A}, {B}, {C}, {D}, {E}, {F}, {G}.

- Add edges one by one:

- Add B-D: 4 (connects {B} and {D} → {B,D}; no cycle). MST: {B-D}, cost = 4.

- Add F-G: 5 (connects {F} and {G} → {F,G}; no cycle). MST: {B-D, F-G}, cost = 9.

- Add E-F: 6 (connects {E} and {F,G} → {E,F,G}; no cycle). MST: {B-D, F-G, E-F}, cost = 15.

- Add D-F: 7 (connects {B,D} and {E,F,G} → {B,D,E,F,G}; no cycle). MST: {B-D, F-G, E-F, D-F}, cost = 22.

- Add C-D: 8 (connects {C} and {B,D,E,F,G} → {B,C,D,E,F,G}; no cycle). MST: {B-D, F-G, E-F, D-F, C-D}, cost = 30.

- Add A-B: 10 (connects {A} and {B,C,D,E,F,G} → {A,B,C,D,E,F,G}; no cycle). MST: {B-D, F-G, E-F, D-F, C-D, A-B}, cost = 40.

- Skip A-E: 15 (A and E already connected; cycle).

- Skip C-G: 16 (C and G already connected; cycle).

- Skip B-C: 20 (B and C already connected; cycle).

- Final MST: Edges {A-B: 10, B-D: 4, C-D: 8, D-F: 7, E-F: 6, F-G: 5}. Total cost: 40.

How to Arrive at the Solution (Kruskal’s)

- Sorting ensures we consider smallest edges first.

- Union-Find efficiently checks cycles (find if roots are same) and merges components (union by rank/path compression for O(α(E)) amortized time, where α is inverse Ackermann).

- Stop after |V|-1 = 6 edges. The greedy choice avoids cycles while minimizing total weight.

Prim’s Algorithm Demonstration

Using the pseudocode above, starting from vertex A (arbitrary choice). We use a priority queue for minimum keys.

- Initialize:

- inMST: All False.

- key: All ∞ except key[A] = 0.

- parent: All -1.

- PQ: All vertices, min is A.

- Iterations:

- Extract A (key=0). inMST[A]=True. Update neighbors: key[B]=10 (parent[B]=A), key[E]=15 (parent[E]=A).

- Extract B (min key=10). inMST[B]=True. Update: key[D]=4 (parent[D]=B), key[C]=20 (parent[C]=B). (Ignore A.)

- Extract D (min key=4). inMST[D]=True. Update: key[C]=8 (better than 20, parent[C]=D), key[F]=7 (parent[F]=D). (Ignore B.)

- Extract F (min key=7). inMST[F]=True. Update: key[E]=6 (better than 15, parent[E]=F), key[G]=5 (parent[G]=F). (Ignore D.)

- Extract G (min key=5). inMST[G]=True. Update: key[C]=8 (no change). (Ignore F.)

- Extract E (min key=6). inMST[E]=True. Update: No better updates. (Ignore A, F.)

- Extract C (min key=8). inMST[C]=True. No better updates. (Ignore B, D, G.)

- Final MST: From parents: B←A (10), D←B (4), F←D (7), G←F (5), E←F (6), C←D (8). Edges {A-B: 10, B-D: 4, D-C: 8, D-F: 7, F-E: 6, F-G: 5}. Total cost: 40.

How to Arrive at the Solution (Prim’s)

- Start from A and grow the tree by always adding the cheapest edge to the fringe (priority queue handles this).

- Updates ensure each vertex's key is the minimum possible at each step.

- Different starting vertex (e.g., G) may yield a different tree but same cost, as the algorithm is correct for connected graphs.

Question:-3(b)

Write the Dijkstra’s shortest path algorithm. Also, find the time complexity of this shortest path algorithm. Find the shortest paths from the vertex ‘A’ using Dijkstra’s shortest path algorithm for the graph given in Figure 1. Show all the steps of computation.

Answer:

Dijkstra’s Shortest Path Algorithm

Dijkstra’s algorithm is a greedy algorithm that finds the shortest paths from a single source vertex to all other vertices in a connected, undirected or directed graph with non-negative edge weights. It maintains a set of vertices whose shortest paths are finalized and grows it by selecting the vertex with the minimum distance estimate.

Pseudocode

Dijkstra(Graph G = (V, E), weights w: E → R ≥ 0, source s ∈ V):

// Initialize

dist = array of size |V|, initialized to infinity // Shortest distance from s

prev = array of size |V|, initialized to -1 // Previous vertex in path

dist[s] = 0

// Priority queue (min-heap) for vertices not yet finalized, keyed by dist

PQ = priority_queue of all vertices, keyed by dist values

while PQ is not empty:

u = extract_min(PQ) // Vertex with smallest dist

for each neighbor v of u:

if dist[v] > dist[u] + w(u, v):

dist[v] = dist[u] + w(u, v)

prev[v] = u

update PQ with new dist for v // Decrease key operation

// To reconstruct path to a target t: backtrack from t using prev until s

return dist, prev

Time Complexity

- With a binary heap for the priority queue and adjacency list representation:

- Extract-min: O(V log V) total (V extractions).

- Decrease-key: O(E log V) total (up to E updates).

- Overall: O((V + E) log V), which simplifies to O(E log V) since E ≥ V-1 in connected graphs.

- For dense graphs (E ≈ V²) using a simple array for minimum selection (no heap): O(V²).

- The O(E log V) variant is common for sparse graphs and assumes a heap that supports efficient decrease-key (e.g., binary or Fibonacci heap; Fibonacci reduces to amortized O(E + V log V)).

Demonstration on the Given Graph: Shortest Paths from A

The graph has vertices A, B, C, D, E, F, G and the edges with weights as shown (undirected, so bidirectional). We apply Dijkstra’s from source A, showing step-by-step updates. We use a priority queue (min-heap) for the next vertex selection.

Initialization

- dist: [A: 0, B: ∞, C: ∞, D: ∞, E: ∞, F: ∞, G: ∞]

- prev: [all -1]

- PQ: All vertices (min keyed on dist; initially A at 0, others ∞)

Step-by-Step Computation

We tabulate the process: each iteration extracts the minimum dist vertex u, then relaxes (updates) its neighbors.

| Iteration | Extracted u (dist[u]) | Updates to Neighbors |

|---|---|---|

| 1 | A (0) | - B: dist[B] = 0 + 10 = 10 (prev[B] = A) - E: dist[E] = 0 + 15 = 15 (prev[E] = A) Current dist: [A:0, B:10, C:∞, D:∞, E:15, F:∞, G:∞] |

| 2 | B (10) | - C: dist[C] = 10 + 20 = 30 (prev[C] = B) - D: dist[D] = 10 + 4 = 14 (prev[D] = B) (A already extracted, ignored) Current dist: [A:0, B:10, C:30, D:14, E:15, F:∞, G:∞] |

| 3 | D (14) | - C: dist[C] = 14 + 8 = 22 (< 30, update; prev[C] = D) - F: dist[F] = 14 + 7 = 21 (prev[F] = D) (B already extracted, ignored) Current dist: [A:0, B:10, C:22, D:14, E:15, F:21, G:∞] |

| 4 | E (15) | - F: 15 + 6 = 21 (== 21, no update) (A already extracted, ignored) Current dist: [A:0, B:10, C:22, D:14, E:15, F:21, G:∞] |

| 5 | F (21) | - G: dist[G] = 21 + 5 = 26 (prev[G] = F) (D, E already extracted, ignored) Current dist: [A:0, B:10, C:22, D:14, E:15, F:21, G:26] |

| 6 | C (22) | - G: 22 + 16 = 38 (> 26, no update) (B, D already extracted, ignored) Current dist: [A:0, B:10, C:22, D:14, E:15, F:21, G:26] |

| 7 | G (26) | (C, F already extracted, ignored) Current dist: [A:0, B:10, C:22, D:14, E:15, F:21, G:26] |

PQ is now empty; all vertices processed.

Final Shortest Distances from A

- A: 0

- B: 10

- C: 22

- D: 14

- E: 15

- F: 21

- G: 26

Shortest Paths from A (Reconstructed using prev)

- To A: A (distance 0)

- To B: A → B (distance 10)

- To C: A → B → D → C (distance 10 + 4 + 8 = 22)

- To D: A → B → D (distance 10 + 4 = 14)

- To E: A → E (distance 15)

- To F: A → B → D → F (distance 10 + 4 + 7 = 21)

Note: Alternative path A → E → F (15 + 6 = 21) has the same distance; the algorithm found one via D. - To G: A → B → D → F → G (distance 10 + 4 + 7 + 5 = 26)

Note: Alternative A → E → F → G (15 + 6 + 5 = 26) equivalent.

How to Arrive at the Solution

- Initialization: Set source distance to 0, others to infinity.

- Relaxation: For each extracted u, check if a shorter path to v exists via u; update if so.

- Priority Queue: Ensures the next u is always the one with the current smallest dist (greedy choice).

- Correctness: Relies on non-negative weights; once a vertex is extracted, its dist is finalized (no shorter path can be found later).

- The steps guarantee optimality by the invariant: at each extraction, dist[u] is the true shortest path.

Question:-3(c)

Explain the algorithm to find the optimal Binary Search Tree. Demonstrate this algorithm to find the Optimal Binary Search Tree for the following probability data (where p_(i) p_i k_(i) k_i q_(i) q_i d_(i) d_i

Answer:

Algorithm to Find the Optimal Binary Search Tree

The optimal binary search tree (BST) problem involves constructing a BST that minimizes the expected search cost, given probabilities p_(i) p_i k_(i) k_i q_(i) q_i d_(i) d_i k_(1) < k_(2) < cdots < k_(n) k_1 < k_2 < \dots < k_n d_(0) d_0 k_(1) k_1 d_(n) d_n k_(n) k_n d_(i) d_i 1 <= i < n 1 \leq i < n k_(i) k_i k_(i+1) k_{i+1}

The expected search cost is defined as sum_(i=1)^(n)p_(i)("depth"(k_(i))+1)+sum_(i=0)^(n)q_(i)"depth"(d_(i)) \sum_{i=1}^n p_i (\text{depth}(k_i) + 1) + \sum_{i=0}^n q_i \text{depth}(d_i)

The algorithm uses dynamic programming:

- Let

e[i][j] e[i][j] k_(i) k_i k_(j) k_j 1 <= i <= j <= n 1 \leq i \leq j \leq n - Let

w[i][j] w[i][j] w[i][j]=sum_(l=i)^(j)p_(l)+sum_(l=i-1)^(j)q_(l) w[i][j] = \sum_{l=i}^j p_l + \sum_{l=i-1}^j q_l - Base cases:

- For empty subtrees (

j=i-1 j = i-1 e[i][i-1]=0 e[i][i-1] = 0 w[i][i-1]=0 w[i][i-1] = 0 i=1 i = 1 n+1 n+1

- For empty subtrees (

- Recurrence:

w[i][j]=w[i][j-1]+p_(j)+q_(j) w[i][j] = w[i][j-1] + p_j + q_j e[i][j]=min_(r=i)^(j)(e[i][r-1]+e[r+1][j]+w[i][j]) e[i][j] = \min_{r=i}^j \left( e[i][r-1] + e[r+1][j] + w[i][j] \right)

- To track the tree structure, record

"root"[i][j] \text{root}[i][j] r r - Compute in order of increasing subtree size

l=j-i+1 l = j - i + 1 n n - The optimal cost is

e[1][n] e[1][n]

Time Complexity

- The DP fills an

O(n^(2)) O(n^2) e[i][j] e[i][j] O(n) O(n) r r - Total:

O(n^(3)) O(n^3)

Demonstration for the Given Probabilities

Given n=4 n=4

p=[p_(1)=0.10,p_(2)=0.15,p_(3)=0.20,p_(4)=0.10] p = [p_1=0.10, p_2=0.15, p_3=0.20, p_4=0.10] q=[q_(0)=0.05,q_(1)=0.10,q_(2)=0.10,q_(3)=0.10,q_(4)=0.10] q = [q_0=0.05, q_1=0.10, q_2=0.10, q_3=0.10, q_4=0.10]

We assume the keys are k_(1),k_(2),k_(3),k_(4) k_1, k_2, k_3, k_4

Step-by-Step Computation

We compute w[i][j] w[i][j] e[i][j] e[i][j] l l

- Length

l=0 l=0 e[i][i-1]=0 e[i][i-1] = 0 w[i][i-1]=0 w[i][i-1] = 0 i=1 i=1 5 5 - Length

l=1 l=1 i=1,j=1 i=1, j=1 w[1][1]=0+0.10+0.10=0.25 w[1][1] = 0 + 0.10 + 0.10 = 0.25 e[1][1]=0+0+0.25=0.25 e[1][1] = 0 + 0 + 0.25 = 0.25 i=2,j=2 i=2, j=2 w[2][2]=0+0.15+0.10=0.35 w[2][2] = 0 + 0.15 + 0.10 = 0.35 e[2][2]=0.35 e[2][2] = 0.35 i=3,j=3 i=3, j=3 w[3][3]=0+0.20+0.10=0.40 w[3][3] = 0 + 0.20 + 0.10 = 0.40 e[3][3]=0.40 e[3][3] = 0.40 i=4,j=4 i=4, j=4 w[4][4]=0+0.10+0.10=0.30 w[4][4] = 0 + 0.10 + 0.10 = 0.30 e[4][4]=0.30 e[4][4] = 0.30

- Length

l=2 l=2 i=1,j=2 i=1, j=2 w[1][2]=0.25+0.15+0.10=0.50 w[1][2] = 0.25 + 0.15 + 0.10 = 0.50 - r=1:

0+0.35+0.50=0.85 0 + 0.35 + 0.50 = 0.85 - r=2:

0.25+0+0.50=0.75 0.25 + 0 + 0.50 = 0.75 e[1][2]=0.75 e[1][2] = 0.75

- r=1:

i=2,j=3 i=2, j=3 w[2][3]=0.35+0.20+0.10=0.65 w[2][3] = 0.35 + 0.20 + 0.10 = 0.65 - r=2:

0+0.40+0.65=1.05 0 + 0.40 + 0.65 = 1.05 - r=3:

0.35+0+0.65=1.00 0.35 + 0 + 0.65 = 1.00 e[2][3]=1.00 e[2][3] = 1.00

- r=2:

i=3,j=4 i=3, j=4 w[3][4]=0.40+0.10+0.10=0.60 w[3][4] = 0.40 + 0.10 + 0.10 = 0.60 - r=3:

0+0.30+0.60=0.90 0 + 0.30 + 0.60 = 0.90 - r=4:

0.40+0+0.60=1.00 0.40 + 0 + 0.60 = 1.00 e[3][4]=0.90 e[3][4] = 0.90

- r=3:

- Length

l=3 l=3 i=1,j=3 i=1, j=3 w[1][3]=0.50+0.20+0.10=0.80 w[1][3] = 0.50 + 0.20 + 0.10 = 0.80 - r=1:

0+1.00+0.80=1.80 0 + 1.00 + 0.80 = 1.80 - r=2:

0.25+0.40+0.80=1.45 0.25 + 0.40 + 0.80 = 1.45 - r=3:

0.75+0+0.80=1.55 0.75 + 0 + 0.80 = 1.55 e[1][3]=1.45 e[1][3] = 1.45

- r=1:

i=2,j=4 i=2, j=4 w[2][4]=0.65+0.10+0.10=0.85 w[2][4] = 0.65 + 0.10 + 0.10 = 0.85 - r=2:

0+0.90+0.85=1.75 0 + 0.90 + 0.85 = 1.75 - r=3:

0.35+0.30+0.85=1.50 0.35 + 0.30 + 0.85 = 1.50 - r=4:

1.00+0+0.85=1.85 1.00 + 0 + 0.85 = 1.85 e[2][4]=1.50 e[2][4] = 1.50

- r=2:

- Length

l=4 l=4 i=1,j=4 i=1, j=4 w[1][4]=0.80+0.10+0.10=1.00 w[1][4] = 0.80 + 0.10 + 0.10 = 1.00 - r=1:

0+1.50+1.00=2.50 0 + 1.50 + 1.00 = 2.50 - r=2:

0.25+0.90+1.00=2.15 0.25 + 0.90 + 1.00 = 2.15 - r=3:

0.75+0.30+1.00=2.05 0.75 + 0.30 + 1.00 = 2.05 - r=4:

1.45+0+1.00=2.45 1.45 + 0 + 1.00 = 2.45 e[1][4]=2.05 e[1][4] = 2.05

- r=1:

How to Arrive at the Solution

- DP Table Construction: Start with empty subtrees (cost 0). For each larger subtree, compute the probability mass

w[i][j] w[i][j] r r w[i][j] w[i][j] r r - Optimal Cost:

e[1][4]=2.05 e[1][4] = 2.05 - Tree Construction: Use the root table recursively:

- Root:

k_(3) k_3 - Left subtree (keys 1-2): Root

k_(2) k_2 - Left of

k_(2) k_2 k_(1) k_1 - Right of

k_(2) k_2

- Left of

- Right subtree (keys 4-4): Root

k_(4) k_4

- Root:

The optimal BST is:

- Root:

k_(3) k_3 - Left:

k_(2) k_2 - Left:

k_(1) k_1 - Right: None

- Left:

- Right:

k_(4) k_4

- Left:

This structure favors higher-probability keys (k_(3)=0.20 k_3 = 0.20 k_(2)=0.15 k_2 = 0.15

Question:-3(d)

Given the following sequence of chain multiplication of the matrices. Find the optimal way of multiplying these matrices:

Answer:

Algorithm to Find the Optimal Matrix Chain Multiplication

The matrix chain multiplication problem involves finding the most efficient way to multiply a sequence of matrices A_(1),A_(2),dots,A_(n) A_1, A_2, \dots, A_n A[p xx q] A[p \times q] B[q xx r] B[q \times r] p xx q xx r p \times q \times r

This is solved using dynamic programming:

- Let

m[i][j] m[i][j] A_(i)*A_(i+1)*cdots*A_(j) A_i \cdot A_{i+1} \cdot \dots \cdot A_j 1 <= i <= j <= n 1 \leq i \leq j \leq n - Let

s[i][j] s[i][j] k k A_(i)*cdots*A_(k) A_i \cdot \dots \cdot A_k A_(k+1)*cdots*A_(j) A_{k+1} \cdot \dots \cdot A_j - Dimensions are given as

d[0],d[1],dots,d[n] d[0], d[1], \dots, d[n] d[i-1]xx d[i] d[i-1] \times d[i] A_(i) A_i A_(i)*A_(i+1)*cdots*A_(j) A_i \cdot A_{i+1} \cdot \dots \cdot A_j k k - Recurrence:

m[i][i]=0 m[i][i] = 0 m[i][j]=min_(i <= k < j)(m[i][k]+m[k+1][j]+d[i-1]*d[k]*d[j]) m[i][j] = \min_{i \leq k < j} \left( m[i][k] + m[k+1][j] + d[i-1] \cdot d[k] \cdot d[j] \right) j > i j > i

- Compute for increasing chain lengths

l=j-i+1 l = j - i + 1 n n - The optimal cost is

m[1][n] m[1][n] s s

Time Complexity

- The DP table is

O(n^(2)) O(n^2) m[i][j] m[i][j] O(n) O(n) - Total:

O(n^(3)) O(n^3)

Demonstration for the Given Matrices

Given matrices:

A_(1) A_1 A_(2) A_2 A_(3) A_3 A_(4) A_4

Dimensions array d d d[i-1]xx d[i] d[i-1] \times d[i] A_(i) A_i

Step-by-Step Computation

We compute m[i][j] m[i][j] s[i][j] s[i][j] l l

- Length

l=1 l=1 m[i][i]=0 m[i][i] = 0 i=1 i = 1 s[i][i] s[i][i]

- Length

l=2 l=2 i=1,j=2 i=1, j=2 d[0]*d[1]*d[2]=10*15*5=750 d[0] \cdot d[1] \cdot d[2] = 10 \cdot 15 \cdot 5 = 750 m[1][2]=m[1][1]+m[2][2]+750=0+0+750=750 m[1][2] = m[1][1] + m[2][2] + 750 = 0 + 0 + 750 = 750 s[1][2]=1 s[1][2] = 1 k=1 k=1

i=2,j=3 i=2, j=3 d[1]*d[2]*d[3]=15*5*20=1500 d[1] \cdot d[2] \cdot d[3] = 15 \cdot 5 \cdot 20 = 1500 m[2][3]=1500 m[2][3] = 1500 s[2][3]=2 s[2][3] = 2

i=3,j=4 i=3, j=4 d[2]*d[3]*d[4]=5*20*10=1000 d[2] \cdot d[3] \cdot d[4] = 5 \cdot 20 \cdot 10 = 1000 m[3][4]=1000 m[3][4] = 1000 s[3][4]=3 s[3][4] = 3

- Length

l=3 l=3 i=1,j=3 i=1, j=3 d[0]*d[2]*d[3]=10*5*20=1000 d[0] \cdot d[2] \cdot d[3] = 10 \cdot 5 \cdot 20 = 1000 k=1 k=1 m[1][1]+m[2][3]+1000=0+1500+1000=2500 m[1][1] + m[2][3] + 1000 = 0 + 1500 + 1000 = 2500 k=2 k=2 m[1][2]+m[3][3]+1000=750+0+1000=1750 m[1][2] + m[3][3] + 1000 = 750 + 0 + 1000 = 1750 m[1][3]=1750 m[1][3] = 1750 s[1][3]=2 s[1][3] = 2

i=2,j=4 i=2, j=4 d[1]*d[3]*d[4]=15*20*10=3000 d[1] \cdot d[3] \cdot d[4] = 15 \cdot 20 \cdot 10 = 3000 k=2 k=2 m[2][2]+m[3][4]+3000=0+1000+3000=4000 m[2][2] + m[3][4] + 3000 = 0 + 1000 + 3000 = 4000 k=3 k=3 m[2][3]+m[4][4]+3000=1500+0+3000=4500 m[2][3] + m[4][4] + 3000 = 1500 + 0 + 3000 = 4500 m[2][4]=4000 m[2][4] = 4000 s[2][4]=2 s[2][4] = 2

- Length

l=4 l=4 i=1,j=4 i=1, j=4 d[0]*d[3]*d[4]=10*20*10=2000 d[0] \cdot d[3] \cdot d[4] = 10 \cdot 20 \cdot 10 = 2000 k=1 k=1 m[1][1]+m[2][4]+2000=0+4000+2000=6000 m[1][1] + m[2][4] + 2000 = 0 + 4000 + 2000 = 6000 k=2 k=2 m[1][2]+m[3][4]+2000=750+1000+2000=3750 m[1][2] + m[3][4] + 2000 = 750 + 1000 + 2000 = 3750 k=3 k=3 m[1][3]+m[4][4]+2000=1750+0+2000=3750 m[1][3] + m[4][4] + 2000 = 1750 + 0 + 2000 = 3750 m[1][4]=3750 m[1][4] = 3750 s[1][4]=2 s[1][4] = 2

Optimal Parenthesization

m[1][4]=3750 m[1][4] = 3750 - Reconstruct using

s s s[1][4]=2 s[1][4] = 2 A_(2) A_2 - Left:

A_(1)*A_(2) A_1 \cdot A_2 s[1][2]=1 s[1][2] = 1 - Right:

A_(3)*A_(4) A_3 \cdot A_4 s[3][4]=3 s[3][4] = 3 - Final multiplication:

(A_(1)*A_(2))*(A_(3)*A_(4)) (A_1 \cdot A_2) \cdot (A_3 \cdot A_4)

How to Arrive at the Solution

- DP Approach: Build costs bottom-up, considering all possible splits. The cost includes previous subproblems plus the multiplication cost.

- Optimality: The recurrence ensures the minimum by trying all

k k - The chosen split minimizes the total scalar multiplications, validated by the computed

m[1][4] m[1][4]

Question:-3(e)

Explain the Rabin Karp algorithm for string matching with the help of an example. Find the time complexity of this algorithm.

Answer:

Rabin-Karp Algorithm for String Matching

The Rabin-Karp algorithm is a string-searching algorithm that uses hashing to efficiently find occurrences of a pattern (substring) within a larger text. It avoids comparing every possible substring character-by-character by instead computing hash values for the pattern and sliding windows of the text. If the hash of a text window matches the pattern's hash, a full character comparison is performed to confirm a match (to handle potential hash collisions).

Key Concepts

- Hashing: Treat strings as numbers in a large base (e.g., base

d=256 d = 256 - Rolling Hash: Efficiently update the hash for the next window in constant time, rather than recomputing from scratch. This is done by subtracting the contribution of the outgoing character and adding the incoming one.

- Modulo Operation: Use a large prime modulus

q q - Handling Collisions: Hashes may collide (different strings with same hash), so always verify matches with direct comparison.

Steps of the Algorithm

- Let

n n T T m m P P m <= n m \leq n - Choose a base

d d q q - Precompute

d^(m-1)modq d^{m-1} \mod q - Compute the hash of the pattern:

p_hash=(P[0]*d^(m-1)+P[1]*d^(m-2)+cdots+P[m-1]*d^(0))modq p\_hash = (P[0] \cdot d^{m-1} + P[1] \cdot d^{m-2} + \dots + P[m-1] \cdot d^0) \mod q - Compute the initial hash of the first

m m

t_hash=(T[0]*d^(m-1)+T[1]*d^(m-2)+cdots+T[m-1]*d^(0))modq t\_hash = (T[0] \cdot d^{m-1} + T[1] \cdot d^{m-2} + \dots + T[m-1] \cdot d^0) \mod q - If

p_hash==t_hash p\_hash == t\_hash T[0..m-1] T[0..m-1] P P - For each subsequent window

i=1 i = 1 n-m n-m - Update

t_hash t\_hash t_hash=(d*(t_hash-T[i-1]*d^(m-1))+T[i+m-1])modq t\_hash = (d \cdot (t\_hash - T[i-1] \cdot d^{m-1}) + T[i+m-1]) \mod q

(Handle negative values by addingq q - If

p_hash==t_hash p\_hash == t\_hash T[i..i+m-1] T[i..i+m-1] P P i i

- Update

Example

Consider the text T="ABCDABCAB" T = "ABCDABCAB" P="ABC" P = "ABC" n=9 n=9 m=3 m=3 d=10 d=10 q=13 q=13 d d q q

- Precompute

d^(m-1)=10^(2)=100mod13=100-7**13=100-91=9 d^{m-1} = 10^2 = 100 \mod 13 = 100 - 7*13 = 100-91=9 - Pattern hash (

P="ABC" P = "ABC"

p_hash=(65*10^(2)+66*10^(1)+67*10^(0))mod13=(6500+660+67)=7227mod13 p\_hash = (65 \cdot 10^2 + 66 \cdot 10^1 + 67 \cdot 10^0) \mod 13 = (6500 + 660 + 67) = 7227 \mod 13

7227 ÷ 13 = 555*13=7215, remainder 12. Sop_hash=12 p\_hash = 12 - Initial text window "ABC":

t_hash=(65*100+66*10+67)=7227mod13=12 t\_hash = (65 \cdot 100 + 66 \cdot 10 + 67) = 7227 \mod 13 = 12

Hashes match (12==12). Compare strings: "ABC" == "ABC" → Match at index 0. - Next window (i=1): "BCD"

Update:t_hash=(10*(12-65*9)+68)mod13 t\_hash = (10 \cdot (12 - 65 \cdot 9) + 68) \mod 13

First, 659=585 mod 13: 585-4513=585-585=0, so 12 - 0 =12.

1012=120 +68=188 mod13: 188-1413=188-182=6.

"BCD" hash=6 ≠12 → No match. - Next (i=2): "CDA"

Update from previous: Subtract C's contrib (679 mod13:679=603,603-46*13=603-598=5), so 6-5=1, then 10=10 +65=75 mod13=75-513=75-65=10 ≠12. - Next (i=3): "DAB" → Continues, no match.

- Next (i=4): "ABC" → Hash will compute to 12, match at index 4 (after update steps).

- And so on. Matches found at 0 and 4.

In a real implementation, characters are treated as integers, and larger primes reduce collisions.

Time Complexity

- Preprocessing: O(m) to compute pattern hash and initial text hash.

- Sliding Window: O(n - m + 1) updates, each O(1) time.

- Verifications: In the average case, few collisions, so O(m) per true match, leading to overall average O(n + m).

- Worst Case: If every hash matches (many collisions), verify all n-m+1 windows, each O(m), so O((n - m + 1) * m) ≈ O(nm). With a good hash (large prime), collisions are rare, making average close to O(n + m).

Question:-4(a)

Explain the term Decision problem with the help of an example. Define the following problems and identify if they are decision problem or optimisation problem? Give reasons in support of your answer.

(i) Travelling Salesman Problem

(ii) Graph Colouring Problem

(iii) 0-1 Knapsack Problem

(ii) Graph Colouring Problem

(iii) 0-1 Knapsack Problem

Answer:

❓ Decision Problem

A decision problem is a question with a yes/no answer.

Example:

"Is there a path from node A to node B in a graph with length ≤ k?"

Example:

"Is there a path from node A to node B in a graph with length ≤ k?"

🧳 (i) Travelling Salesman Problem (TSP)

- Standard form: Find the shortest route visiting each city exactly once and returning to the start.

- This is an optimization problem (minimize route length).

- Decision version: "Is there a route of length ≤ k?"

- This is a decision problem.

Reason: The core question seeks a minimum value, but it can be framed as a decision problem by asking if a solution exists within a bound.

🎨 (ii) Graph Colouring Problem

- Standard form: Assign colours to vertices so no adjacent vertices share a colour, using the fewest colours.

- This is an optimization problem (minimize colours).

- Decision version: "Can the graph be coloured with k colours?"

- This is a decision problem.

Reason: The goal is to minimize colours, but it is often treated as a decision problem for complexity analysis (e.g., k-colouring).

🎒 (iii) 0-1 Knapsack Problem

- Standard form: Select items to maximize profit without exceeding weight capacity.

- This is an optimization problem (maximize profit).

- Decision version: "Is there a subset of items with profit ≥ P and weight ≤ W?"

- This is a decision problem.

Reason: The natural form seeks maximum profit, but it can be converted to a decision problem by introducing a profit threshold.

✅ Summary:

- All three are optimization problems in their standard form.

- Each has a decision version used in computational complexity theory (e.g., NP-completeness).

- Decision problems are easier to analyze in terms of complexity classes.

Question:-4(b)

What are P and NP class of Problems? Explain each class with the help of at least two examples.

Answer:

🧠 P and NP Classes of Problems

🟢 Class P (Polynomial Time)

Definition: Problems that can be solved by a deterministic Turing machine in polynomial time (O(n^(k)) O(n^k) k k

Examples:

- Sorting a list

- Algorithms like Merge Sort run in

O(n log n) O(n \log n)

- Algorithms like Merge Sort run in

- Finding the shortest path in a graph (Dijkstra's algorithm)

- Runs in

O(V^(2)) O(V^2) O(E+V log V) O(E + V \log V)

- Runs in

🔴 Class NP (Nondeterministic Polynomial Time)

Definition: Problems whose solutions can be verified in polynomial time by a deterministic Turing machine. Note: NP does NOT mean "not polynomial"; it means "nondeterministic polynomial".

Examples:

- Boolean Satisfiability (SAT)

- Given a Boolean formula, is there an assignment of variables that makes it true?

- Verification: Check if a given assignment satisfies the formula in linear time.

- Traveling Salesman Problem (Decision Version)

- Is there a route of length ≤ k?

- Verification: Check if a given route visits all cities and has length ≤ k in polynomial time.

🔑 Key Points:

- P ⊆ NP (If you can solve it, you can verify it).

- Open Question: Is P = NP? (One of the Millennium Prize Problems).

- NP includes problems that are "easy" to verify but may be "hard" to solve.

📊 Comparison:

| Class | Solvable in Poly-Time | Verifiable in Poly-Time | Examples |

|---|---|---|---|

| P | Yes | Yes | Sorting, Shortest Path |

| NP | Unknown | Yes | SAT, TSP |

Understanding P and NP is crucial for complexity theory and cryptography! 🎯

Question:-4(c)

Define the NP-Hard and NP-Complete problem. How are they different from each other. Explain the use of polynomial time reduction with the help of an example.

Answer:

🧩 NP-Hard and NP-Complete Problems

🔴 NP-Hard

A problem is NP-hard if it is at least as hard as the hardest problems in NP. This means any problem in NP can be reduced to it in polynomial time. NP-hard problems may not be in NP themselves.

Example:

- Halting Problem (undecidable, but NP-hard)

- Traveling Salesman Problem (optimization version)

🟠 NP-Complete

A problem is NP-complete if it is:

- In NP (verifiable in polynomial time).

- NP-hard (all NP problems reduce to it).

Examples:

- Boolean Satisfiability (SAT)

- Graph Coloring

- Subset Sum

🔍 Key Differences:

| NP-Hard | NP-Complete |

|---|---|

| May not be in NP | Must be in NP |

| Includes optimization problems | Typically decision problems |

| e.g., Halting Problem | e.g., 3-SAT |

⏱️ Polynomial-Time Reduction

A reduction transforms problem A to problem B in polynomial time such that solving B solves A. Used to prove NP-hardness.

Example: Reducing 3-SAT to Graph Coloring:

- Show that if we can solve Graph Coloring quickly, we can solve 3-SAT quickly.

- Construct a graph from a 3-CNF formula where coloring the graph with k colors implies a satisfying assignment.

This proves Graph Coloring is NP-hard if 3-SAT is NP-hard.

Question:-4(d)

Define the following Problems:

(i) SAT Problem

(ii) Clique Problem

(iii) Hamiltonian Cycle Problem

(iv) Subset Sum Problem

(ii) Clique Problem

(iii) Hamiltonian Cycle Problem

(iv) Subset Sum Problem

Answer:

📌 (i) SAT Problem (Boolean Satisfiability Problem)

Definition: Given a Boolean formula (e.g., in conjunctive normal form), is there an assignment of truth values to variables that makes the entire formula true?

Example:

Formula:(x_(1)vv notx_(2))^^(notx_(1)vvx_(3)) (x_1 \lor \neg x_2) \land (\neg x_1 \lor x_3)

Is there values forx_(1),x_(2),x_(3) x_1, x_2, x_3 x_(1)="true",x_(2)="false",x_(3)="true" x_1 = \text{true}, x_2 = \text{false}, x_3 = \text{true}

Formula:

Is there values for

👥 (ii) Clique Problem

Definition: A clique is a complete subgraph (every pair of vertices connected). The decision problem: Does a graph contain a clique of size k k

Example:

In a social network, is there a group ofk k

In a social network, is there a group of

🔁 (iii) Hamiltonian Cycle Problem

Definition: Does a graph contain a cycle that visits every vertex exactly once and returns to the start?

Example:

Can a salesperson visit each city exactly once and return home?

Can a salesperson visit each city exactly once and return home?

💰 (iv) Subset Sum Problem

Definition: Given a set of integers and a target sum T T T T

Example:

Set:{3,7,2,8} \{3, 7, 2, 8\}

Set: